Description

This case study walks through the user research and design process behind a Chrome extension I built. The extension uses camera-based image recognition to let people control video playback in Chrome with gestures from across the room. I was responsible for ideating, designing, and building the experience.

| My Role | Timeline | Team Size | Techniques |
|---|---|---|---|
| UX Designer, Developer | 3-4 Weeks | 1 Person | UX Study, Chrome Extension, ML |

How the problem begins

There are many situations where we put up with a non-intuitive user experience and find a workaround instead. If the hack works, we stop asking for a change. As a UI/UX designer, I want to improve those experiences. When I saw my husband connect our laptop to the TV and use a mouse to control YouTube, I knew something was not intuitive enough for him. Why couldn't he use Chromecast? Why didn't he use the YouTube TV app we had installed? So I decided to conduct a user study to find out what users actually needed.

Problem Space

Users: People who have a smart TV at home and use apps to watch videos

Context: A room with a television

Goal: Consume media content for themselves

Task: Use a TV remote or a phone to control video playback

Define user needs

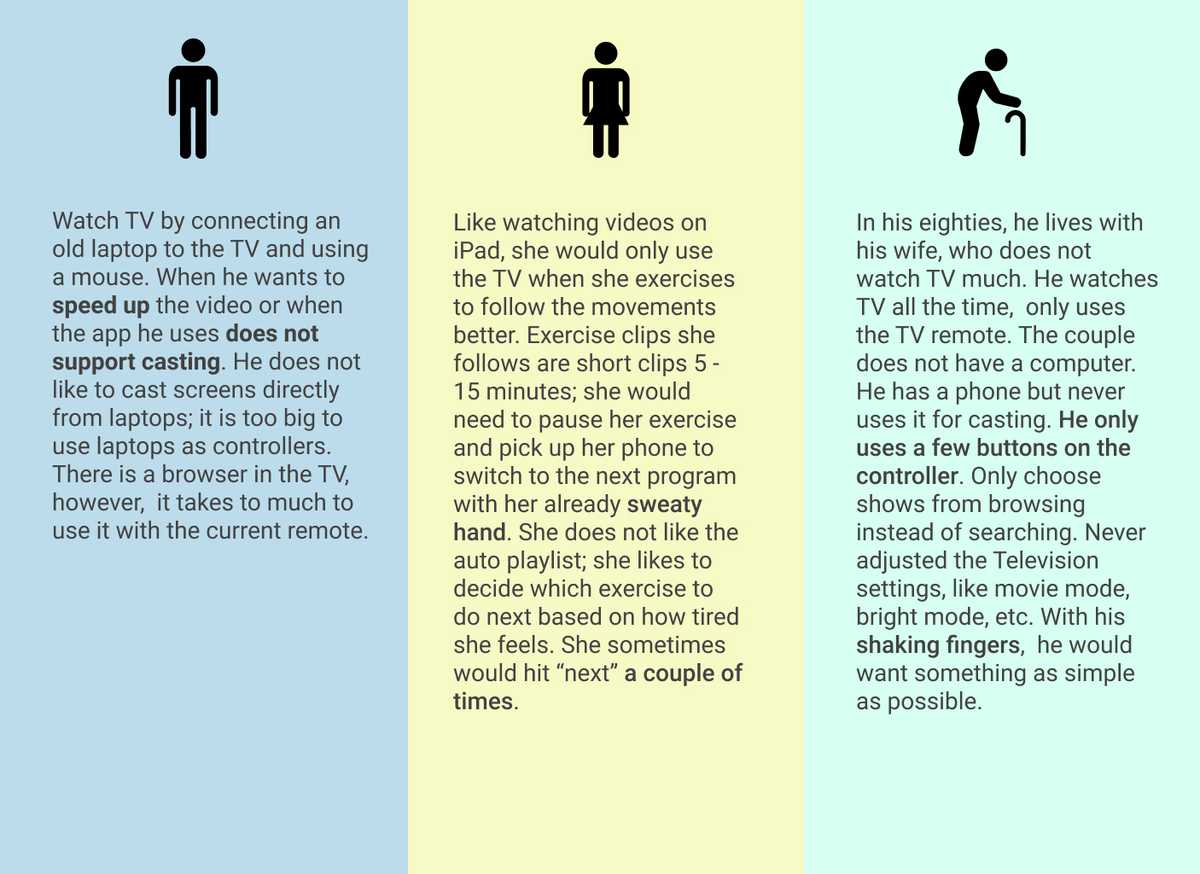

I observed users interacting with their smart TVs in their natural environment and asked them questions.

How Might We

- How might we help users use a browser more easily on a TV?

- How might we help users skip videos without touching a device?

- How might we help users control the TV if their motor skills or eyesight decline?

- How might we remove the intermediate control device between users and their goal?

Users A and B need more direct control interactions to complete their current tasks more easily. User C, meanwhile, needs simpler control interactions to open up new possibilities.

Direct Manipulation

Users should feel that they are directly manipulating the object when controlling the TV.

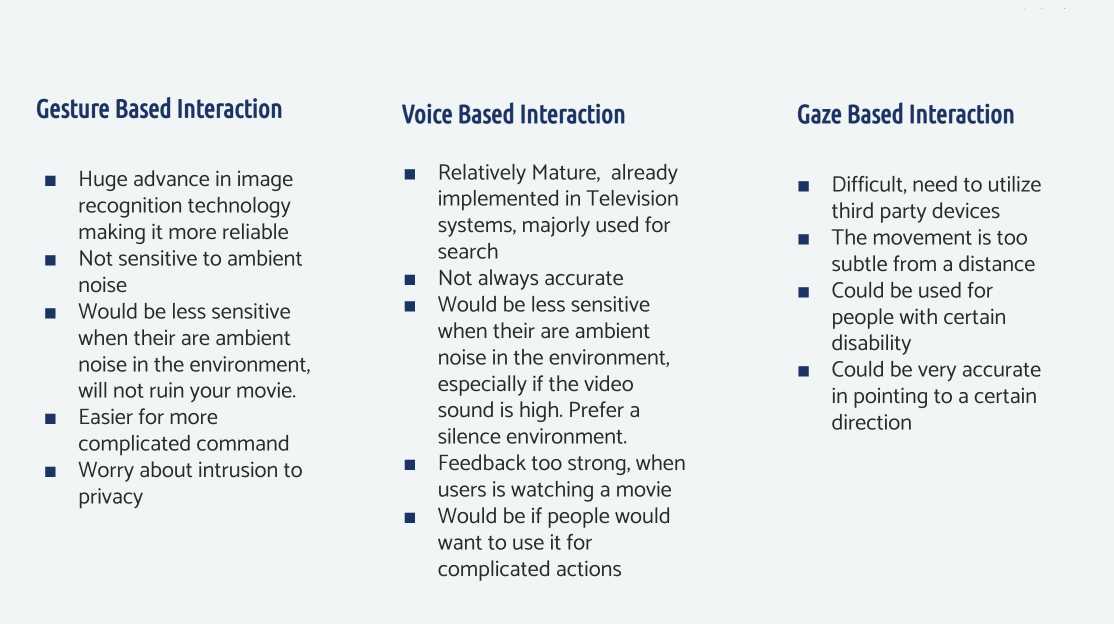

Brainstorm ideas

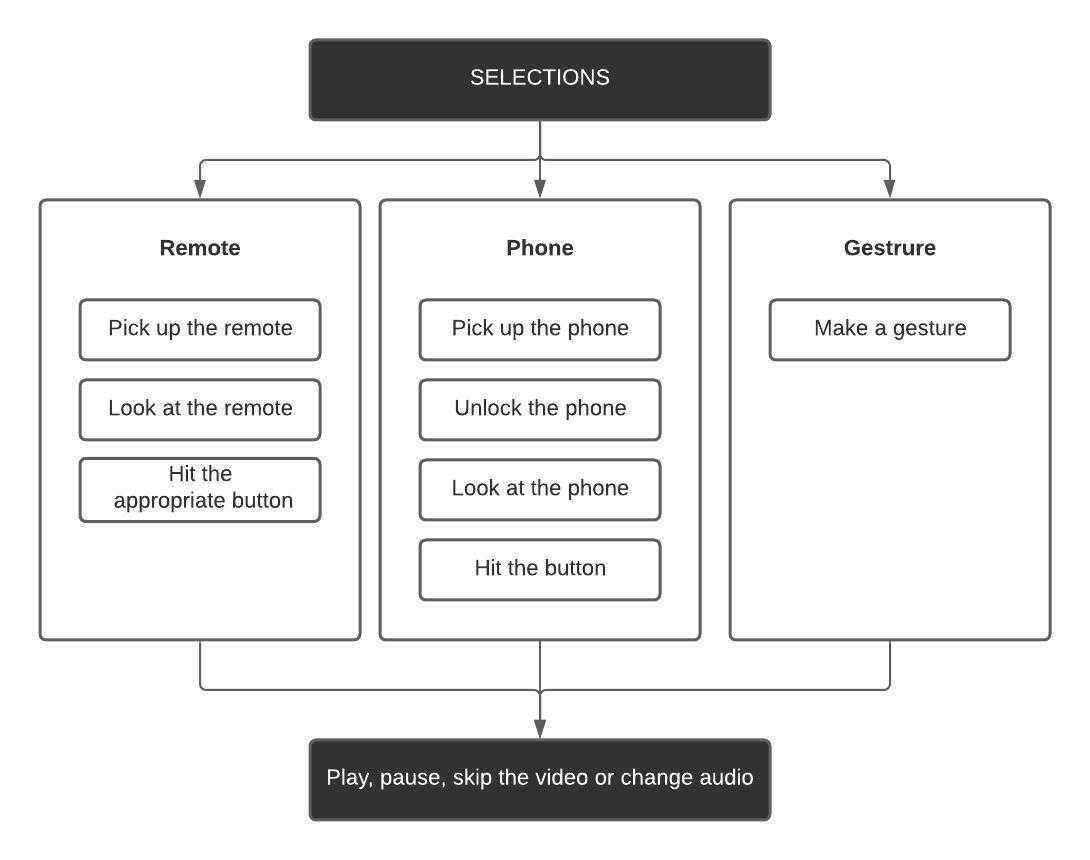

Reduced activities

Potential challenges

Solution plan

- TensorFlow Lite can compress trained models and run them locally on small devices.

- Setup only requires granting camera access.

- For the prototype, build everything in the Chrome browser to keep the experience consistent.
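One common way TensorFlow Lite shrinks a trained model, as the first point of the plan relies on, is post-training quantization: 32-bit float values are stored as 8-bit integers under the affine mapping real_value = (int8_value - zero_point) * scale. The sketch below reimplements that mapping in plain TypeScript for illustration only. It is not the TensorFlow Lite converter, it uses a simplified per-tensor asymmetric scheme, and the way `chooseParams` picks the scale and zero point is an assumption.

```typescript
// Simplified per-tensor affine int8 quantization, following the
// TensorFlow Lite convention: real_value = (int8_value - zeroPoint) * scale.
interface QuantParams {
  scale: number;
  zeroPoint: number;
}

// Pick scale and zero point so the observed range maps onto [-128, 127].
function chooseParams(values: number[]): QuantParams {
  // Widen the range to include 0 so that 0.0 maps exactly onto zeroPoint.
  const min = Math.min(0, ...values);
  const max = Math.max(0, ...values);
  const scale = max === min ? 1 : (max - min) / 255;
  const zeroPoint = Math.round(-128 - min / scale);
  return { scale, zeroPoint };
}

// Store each float as one signed byte.
function quantize(values: number[], p: QuantParams): Int8Array {
  const out = new Int8Array(values.length);
  values.forEach((v, i) => {
    const q = Math.round(v / p.scale) + p.zeroPoint;
    // Clamp explicitly: Int8Array would otherwise wrap out-of-range values.
    out[i] = Math.max(-128, Math.min(127, q));
  });
  return out;
}

// Recover approximate floats from the stored bytes.
function dequantize(q: Int8Array, p: QuantParams): number[] {
  return Array.from(q, (v) => (v - p.zeroPoint) * p.scale);
}
```

Usage: `quantize(weights, chooseParams(weights))` stores each value in 1 byte instead of the 4 bytes of a float32, about four times smaller, and `dequantize` recovers each value to within roughly one `scale` step.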

Prototyping

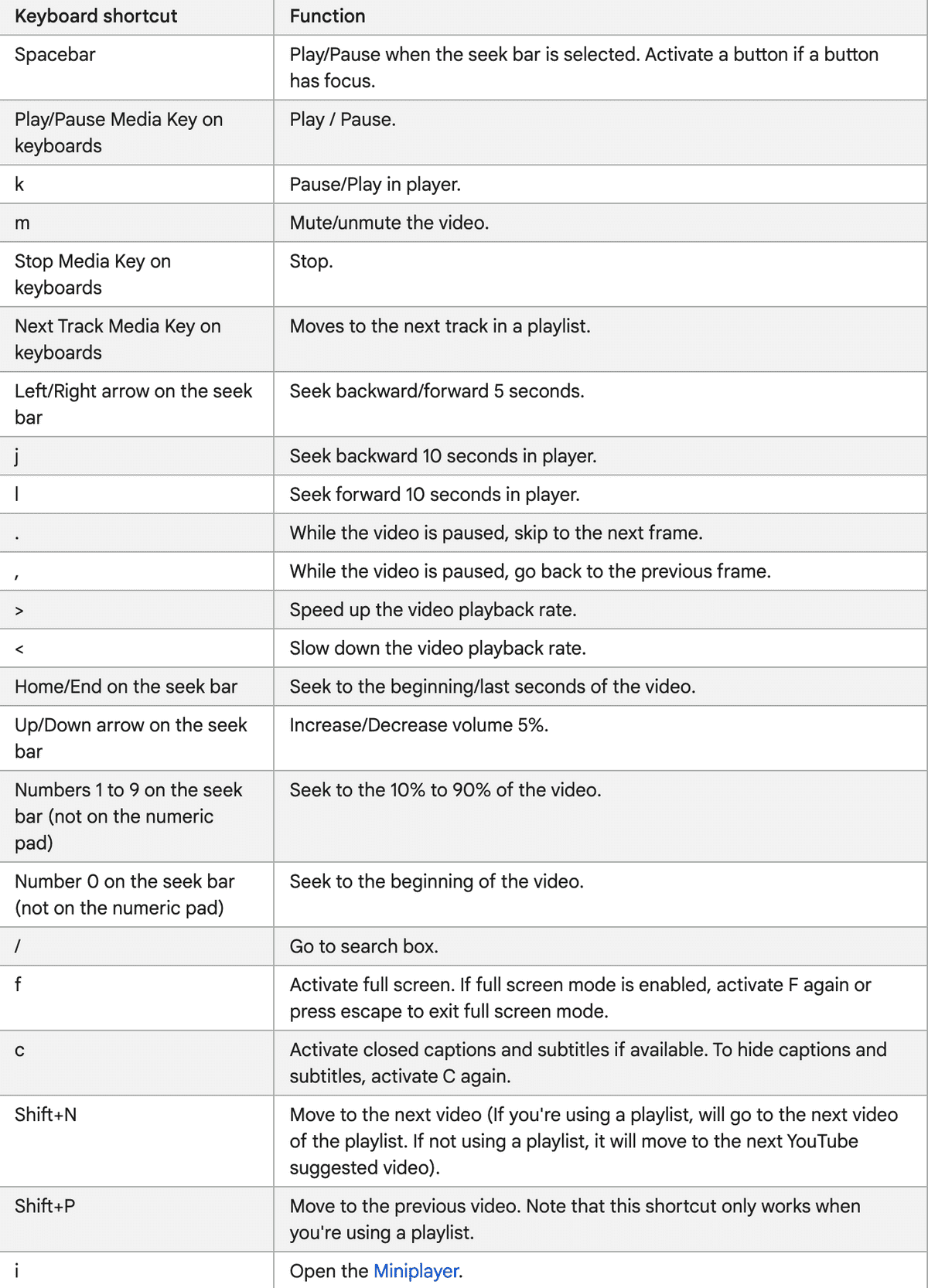

Decide commands

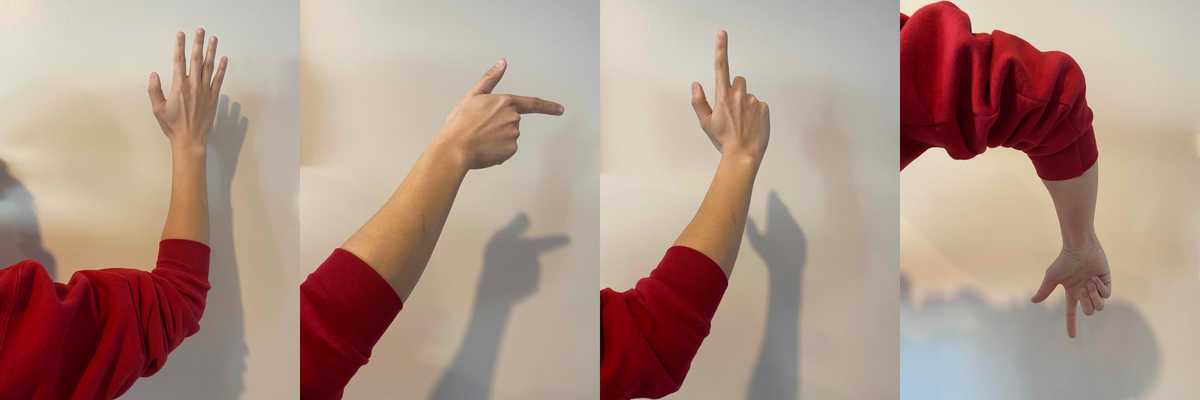

For the machine learning model to recognize each gesture reliably, the gestures must be clearly distinct from one another.
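A hypothetical illustration, not taken from the project, of why distinctiveness matters: a classifier outputs one score per gesture, and when two gestures look alike their probabilities end up close together. A simple rule that acts only when the top class is confident and clearly ahead of the runner-up then rejects those frames. The labels, the thresholds (0.6 and 0.3), and the rule itself are assumptions.

```typescript
// Convert raw model scores (logits) into probabilities that sum to 1.
function softmax(logits: number[]): number[] {
  const maxLogit = Math.max(...logits); // subtract the max for numerical stability
  const exps = logits.map((z) => Math.exp(z - maxLogit));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Hypothetical acceptance rule: act only when the top gesture is confident
// AND clearly ahead of the runner-up; otherwise treat the frame as "no command".
function acceptPrediction(
  labels: string[],
  logits: number[],
  minProb = 0.6,
  minMargin = 0.3,
): string | null {
  const probs = softmax(logits);
  // Class indices sorted by descending probability.
  const order = probs.map((_, i) => i).sort((a, b) => probs[b] - probs[a]);
  const top = probs[order[0]];
  const runnerUp = order.length > 1 ? probs[order[1]] : 0;
  return top >= minProb && top - runnerUp >= minMargin ? labels[order[0]] : null;
}
```

With look-alike gestures the top two probabilities can split the mass (for example about 0.62 vs 0.38), and the margin check rejects the frame even though the top class passes the probability threshold.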

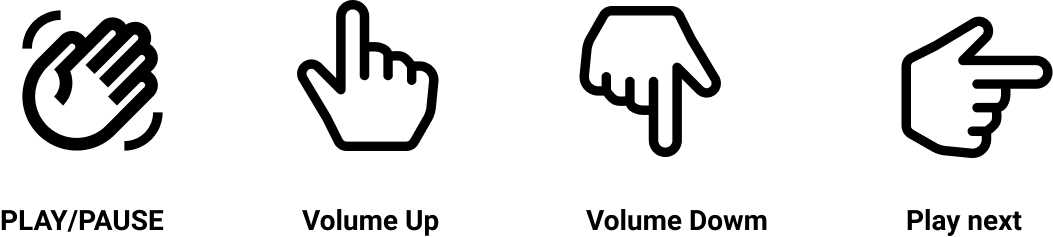

The training model

The model was built with TensorFlow.

The Mistake

Because it is a Chrome extension, I tested the prototype on my computer rather than on the TV for convenience. The model predicted my hand gestures fairly accurately. However, I had not considered distance. Later, when I tried to control YouTube videos from across the room, the model became inaccurate. Should I use body positions instead of hand gestures? And if users had to move their whole body, as in Just Dance, would that cause more fatigue?
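The distance problem follows from basic camera geometry. Under a simple pinhole-camera model (an approximation that ignores lens distortion), an object of physical height H at distance d from a camera with focal length f, measured in pixels, spans about h = fH/d pixels. The numbers below are illustrative assumptions, not measurements from this project: a hypothetical focal length of 600 px and a hand about 0.18 m tall, seen from laptop distance (0.5 m) and couch distance (3 m).

```latex
h = \frac{f\,H}{d}, \qquad
h_{0.5\,\mathrm{m}} = \frac{600 \times 0.18}{0.5} = 216\ \text{px}, \qquad
h_{3\,\mathrm{m}} = \frac{600 \times 0.18}{3} = 36\ \text{px}
```

Moving six times farther away makes the hand six times shorter in the image and leaves it about 36 times fewer pixels, so finger-level detail that a model trained at laptop distance relies on largely disappears.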

Evaluation

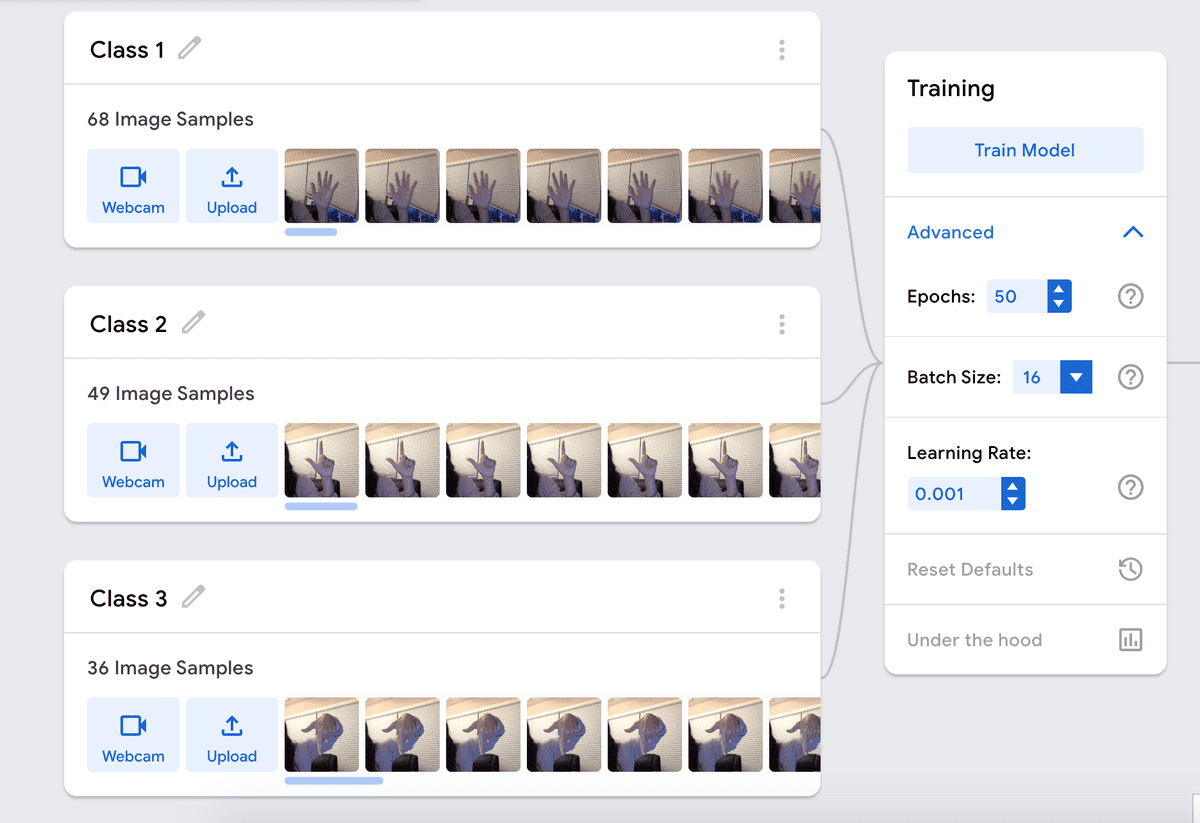

To address my concern, I started looking into research papers. Because this project had few UX research resources, I looked for papers that could help validate my idea, such as "Design and Usability Analysis of Gesture-Based Control for Common Desktop Tasks." Surprisingly, according to this paper, users do not prefer hand gestures over arm gestures, and arm gestures are usually more pleasant for bigger screens. So I trained another model on arm gestures.
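The project's fix was to train a second model on arm gestures. The sketch below uses a different and simpler technique, a hand-written rule over pose keypoints, only to illustrate why arm poses hold up better at a distance: they depend on the relative positions of large landmarks such as shoulders and wrists rather than on finger detail. It assumes a pose-estimation model that returns 2D keypoints with confidence scores; the `Keypoint` and `UpperBody` shapes, the gesture names, and the 0.5 thresholds are all hypothetical.

```typescript
// Hypothetical 2D keypoint in image coordinates (y grows downward).
interface Keypoint {
  x: number;
  y: number;
  score: number; // detector confidence in [0, 1]
}

// Hypothetical subset of body landmarks needed for the rule below.
interface UpperBody {
  leftShoulder: Keypoint;
  rightShoulder: Keypoint;
  leftWrist: Keypoint;
  rightWrist: Keypoint;
}

type ArmGesture = "both_arms_up" | "left_arm_up" | "right_arm_up" | "none";

function classifyArms(p: UpperBody, minScore = 0.5): ArmGesture {
  const parts = [p.leftShoulder, p.rightShoulder, p.leftWrist, p.rightWrist];
  // Ignore frames where any landmark is too uncertain to trust.
  if (parts.some((k) => k.score < minScore)) return "none";
  // Shoulder width shrinks with distance, so a margin proportional to it
  // keeps the rule scale-invariant whether the user is near or far.
  const shoulderWidth = Math.abs(p.leftShoulder.x - p.rightShoulder.x);
  const margin = 0.5 * shoulderWidth;
  // A wrist counts as "up" when it is clearly above its shoulder.
  const leftUp = p.leftWrist.y < p.leftShoulder.y - margin;
  const rightUp = p.rightWrist.y < p.rightShoulder.y - margin;
  if (leftUp && rightUp) return "both_arms_up";
  if (leftUp) return "left_arm_up";
  if (rightUp) return "right_arm_up";
  return "none";
}
```

Tying the threshold to shoulder width is the key design choice: the same pose produces the same result at 0.5 m and at 3 m, which a fixed pixel threshold would not.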

I also chose to evaluate the idea with a script prototype: I described the experience to users and asked them to imagine it. They liked the idea and said they would try it if the predictions were accurate enough. With more time, I would have run a Wizard of Oz study.

Another approach I could have taken is competitor analysis, drawing inspiration from similar products. Games, for example, use gesture-based interactions quite often.

Result

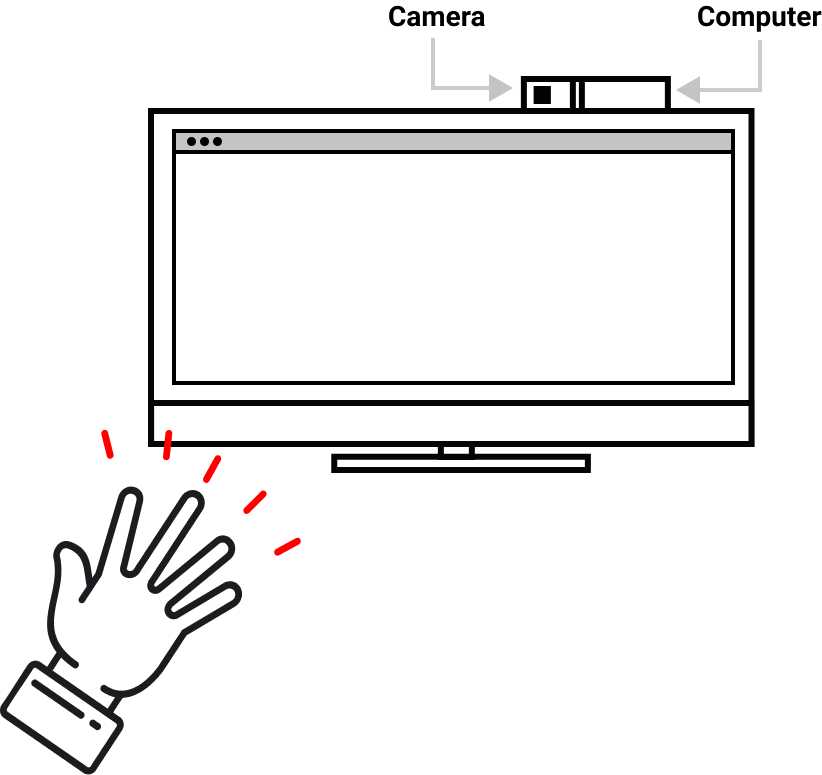

I built a working Chrome extension that asks for camera permission, captures predefined gestures (combinations of hand and arm movements rather than the hand alone), and injects code into the browser to convert each gesture into a key command. I connected my 10-year-old laptop to the TV, and its camera captured my movements to control everything in the browser.
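A rough sketch of the last step, assuming hypothetical gesture labels and a plain-object command format defined here: each recognized gesture is looked up in a table of YouTube's standard keyboard shortcuts (k for play/pause, j and l to jump back or forward 10 seconds, Shift+N for the next video), and a cooldown keeps a gesture held across many camera frames from firing repeatedly. In a content script, the returned descriptor could then be passed to `new KeyboardEvent("keydown", ...)` and dispatched on the page. Whether the YouTube player responds to such synthetic events is an assumption not verified here, and the case study does not describe the exact injection method used.

```typescript
// Keyboard shortcut to replay; field names mirror KeyboardEvent's.
interface KeyCommand {
  key: string;
  shiftKey: boolean;
}

// Hypothetical gesture labels mapped to YouTube's standard keyboard shortcuts.
const GESTURE_TO_KEY: Record<string, KeyCommand> = {
  both_arms_up: { key: "k", shiftKey: false }, // play / pause
  left_arm_up: { key: "j", shiftKey: false }, // back 10 seconds
  right_arm_up: { key: "l", shiftKey: false }, // forward 10 seconds
  arms_crossed: { key: "N", shiftKey: true }, // next video (Shift+N)
};

// Emits at most one command per cooldown window, so a gesture held
// across many camera frames triggers only once.
class GestureDispatcher {
  private readonly cooldownMs: number;
  private lastFiredAt = -Infinity;

  constructor(cooldownMs = 1000) {
    this.cooldownMs = cooldownMs;
  }

  // Call once per camera frame with the recognized gesture (or null).
  handle(gesture: string | null, nowMs: number): KeyCommand | null {
    if (gesture === null) return null;
    const command = GESTURE_TO_KEY[gesture];
    if (!command) return null; // unknown gestures never reset the cooldown
    if (nowMs - this.lastFiredAt < this.cooldownMs) return null;
    this.lastFiredAt = nowMs;
    return command;
  }
}
```

Keeping the mapping in a plain table makes it easy to rebind gestures after user testing without touching the recognition code.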

Because my model is not very accurate, I did not publish the extension to the Chrome Web Store. But I would be happy to walk through how I developed it in a separate dev article.

To improve

The old laptop is a bulky setup that feels intrusive in the room. Users also have to open the computer and then launch Chrome every time they want to use the feature, which adds steps to the experience. Still, the experiment gave me some new ideas. We could build a low-spec computer without a screen (something like a mini TV box) that contains only a browser and a camera, and connect it to any TV to capture all kinds of gestures.

Impact

- Explored ways to close the gap between users and their tasks by removing unnecessary interfaces.

- Explored opportunities for people with accessibility needs.

- Explored the potential of new hardware.

What I Achieved

- Being attentive to the problems people encounter in daily life.

- Researching and developing a concept with limited resources.

- Knowing the available technologies and their constraints.